22 измененных файлов с 1202 добавлено и 1273 удалено

BIN

flip_store/.DS_Store

+ 11

- 34

flip_store/README.md

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 1

flip_store/alloc/flip_store_alloc.c

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 1

flip_store/alloc/flip_store_alloc.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 4

- 3

flip_store/app.c

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 6

- 6

flip_store/application.fam

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 4

- 3

flip_store/apps/flip_store_apps.c

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 2

- 1

flip_store/apps/flip_store_apps.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

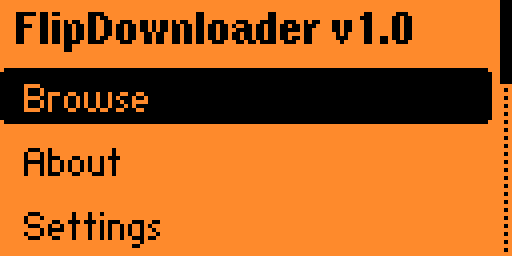

BIN

flip_store/assets/01-main-menu.png

+ 10

- 1

flip_store/assets/CHANGELOG.md

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 10

- 33

flip_store/assets/README.md

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 9

- 5

flip_store/callback/flip_store_callback.c

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 1

flip_store/callback/flip_store_callback.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 86

- 6

flip_store/firmwares/flip_store_firmwares.c

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 4

- 4

flip_store/firmwares/flip_store_firmwares.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 1

flip_store/flip_store.c → flip_store/flip_downloader.c

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 7

- 5

flip_store/flip_store.h → flip_store/flip_downloader.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 1

flip_store/flip_storage/flip_store_storage.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

Разница между файлами не показана из-за своего большого размера

+ 551

- 780

flip_store/flipper_http/flipper_http.c

+ 109

- 273

flip_store/flipper_http/flipper_http.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 383

- 113

flip_store/github/flip_store_github.c

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 1

flip_store/github/flip_store_github.h

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||